There is absolutely nothing fun about the word “audit”.

Yet in the AI era, content maintenance is a critical part of a successful organic marketing strategy.

We recently analysed over 50,000 pieces of B2B content, and the results showed the extent to which brands are accumulating content debt. Content velocity has increased significantly in the last three years, and brands are leveraging this to build “always on” short-form content engines. The challenge is that just 7% of the 50,000+ pieces we studied are “evergreen”, meaning that the other 93% are either out of date or soon will be.

This is what we mean by content debt. Every time you expand the surface area of your content library, you take on a maintenance debt that must be paid. This is not to say that harnessing AI to increase content velocity is a bad strategy, but it should raise the bar of what content is worth publishing, and brands must build an ongoing strategy to identify and service content debt.

For starters, failure to do so is a huge waste. 80% of B2B content goes completely unused – that’s a lot of wasted energy and missed opportunity, when much of that library could either be repurposed into valuable content, or removed.

More importantly, content debt is becoming an active problem. LLMs form their perception of your brand – who you are, who you’re for, and when to recommend you – not just based on your shiny new blog posts, but based on your entire library. Where old content used to gather dust, it now creates currency and consistency issues across your library that can harm your brand’s authority and dilute its positioning in the eyes of these models.

The thought of auditing what could be thousands of pages is a daunting prospect. It’s a tedious, boring, manual job typically reserved for an occasional “spring clean”. Luckily, Demand-Genius’ AI can help you stay on top of your entire library, no matter how big, and build a simple automated process to keep it all on brand and message – not to mention finding quick wins and opportunities!

Whether you’re auditing 50 blog posts, 500 or 11,000 (our current record!), this article will help you construct prompts that generate deep, actionable insights in minutes.

Demand-Genius AI Content Audits

As soon as you sign up, Demand-Genius’ AI will build and maintain a complete directory of your content. To get you started, we’ll classify that content by Content Type, Topic, Audience, Funnel Stage (and more) so you can get a bird’s-eye view of your content strategy.

Most importantly, though, you can use our AI to run deep, human-like analysis of your content at scale. This lets you tailor your Content Audit to your business (ICP, Persona, Products) and analyse anything you want, to improve SEO, GEO, Brand Voice or just make your content more persuasive and effective.

9 Proven Prompt Templates

Here are 9 prompts you can copy, paste, and customise right now (also available via the Demand-Genius Template Library!). They should also serve as inspiration for other ways you can use our AI columns!

| Use Case | Purpose | Prompt |

|---|---|---|

| Key Language Usage | Brand |

Task: Check whether this content includes any of the brand’s approved key language elements or deprecated terminology.

Reference: [Insert Key Language List or Deprecated Terminology Here] (e.g., active brand phrases, taglines, preferred terminology, or legacy terms to avoid) Categories: • Yes — One or more specified key terms or phrases appear in context. • No — None of the provided language or terminology is detected. Instruction: • Compare the text against the provided list of active or legacy brand language. • Match exact or close variants, ignoring case sensitivity. • Count each term only once even if repeated. • Return one label only: Yes or No. |

| Message Pillar Mapping | Brand |

Task: Map this content to the single most relevant message pillar from the list provided.

Reference: [Insert Message Pillar Framework Here] (e.g., “Product Innovation”, “Customer Outcomes”, “Market Leadership”, etc.) Categories: • Pillar A — [Insert Label] • Pillar B — [Insert Label] • Pillar C — [Insert Label] • Pillar D — [Insert Label] (Adjust based on your actual framework.) Instruction: • Read the full content and identify the dominant strategic message it reinforces. • Prioritise what the piece is trying to prove or highlight, rather than surface-level topics. • Select the single pillar that best fits the overall narrative and business objective. • Return one label only, using the exact pillar name. |

| Subject Matter Expertise | Persuasion |

Task: Classify the level of subject matter expertise demonstrated in this content.

Categories: • Beginner — Basic explanations, introductory concepts, or high-level overviews with little depth. • Intermediate — Practical detail, some nuance, and evidence of applied knowledge or experience. • Expert — Deep domain insight, advanced concepts, frameworks, or original perspectives that suggest strong authority. Instruction: • Focus on the depth of explanation, precision of language, and use of concrete examples or frameworks. • Consider whether a knowledgeable reader would learn something genuinely new or nuanced. • Return one label only: Beginner, Intermediate, or Expert. |

| Source Attribution Assessment | GEO |

Task: Assess how well the content provides source attribution for claims, statistics, or quotes.

Categories: • Strong — Clear, specific attribution (e.g., named studies, reports, organisations, or experts) for most key claims. • Moderate — Some attribution is present, but key claims or statistics lack clear sources. • Weak — Little or no attribution; claims or data points are made without referencing sources. Instruction: • Look for explicit mentions of external sources (reports, surveys, companies, experts, etc.). • Evaluate whether the reader could reasonably verify the claims if they wanted to. • Return one label only: Strong, Moderate, or Weak. |

| FAQ Structure Presence | GEO |

Task: Determine whether this content includes an FAQ-style structure that mirrors real buyer questions.

Categories: • Present — Content clearly includes question-and-answer sections, headings phrased as questions, or an FAQ block. • Not Present — No obvious FAQ-style structure or question-led formatting. Instruction: • Scan for headings that are phrased as questions, or bullet lists structured as Q&A. • Ignore rhetorical questions within paragraphs unless they clearly structure the content. • Return one label only: Present or Not Present. |

| Featured Snippet Readiness | GEO |

Task: Evaluate whether this content is structured in a way that could win a search engine featured snippet for key queries.

Categories: • High — Clear, concise answers near the top; strong use of definitions, lists, or step-by-step explanations. • Medium — Some structured answers or lists, but not consistently optimised for snippets. • Low — Long, unstructured text with no obvious snippet-friendly sections. Instruction: • Check if key questions are answered in 1–3 concise sentences or clean lists. • Look for use of headings and formatting that isolate direct answers. • Return one label only: High, Medium, or Low. |

| Citation Friendliness | GEO |

Task: Assess how easily this content could be cited or referenced by other sites, analysts, or creators.

Categories: • Highly Citable — Contains unique data, frameworks, definitions, or quotable insights that others would want to reference. • Moderately Citable — Some useful insights or explanations, but not especially distinctive or original. • Low Citable Value — Mostly generic, derivative, or surface-level content with little that stands out as reference-worthy. Instruction: • Focus on originality, clarity of ideas, and presence of concrete takeaways. • Consider whether an external writer would plausibly link to or quote this content. • Return one label only: Highly Citable, Moderately Citable, or Low Citable Value. |

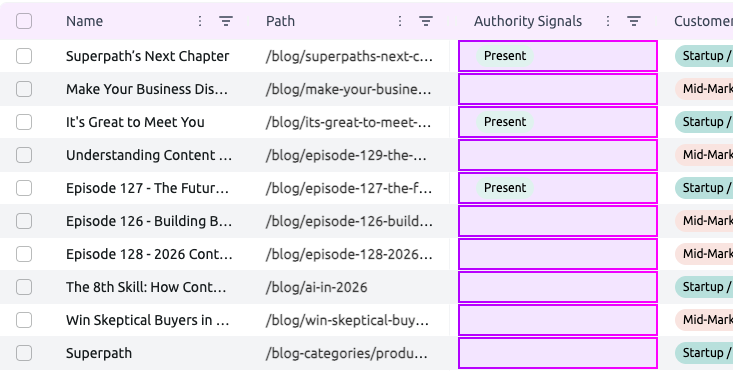

| Authority Signals | GEO |

Task: Identify whether this content includes visible authority signals that build trust.

Categories: • Strong — Includes multiple authority signals (e.g., named experts, case studies, recognised brands, certifications, or awards). • Moderate — Includes at least one clear authority signal. • Weak — No obvious authority signals present. Instruction: • Look for mentions of real customers, logos, results, or credentials that demonstrate credibility. • Ignore generic claims like “leading” or “best-in-class” unless they are backed by specific proof. • Return one label only: Strong, Moderate, or Weak. |

| Information Density | GEO |

Task: Assess the information density of this content — the ratio of factual or data-driven material to overall narrative.

Categories: • Low — Few verifiable facts; mostly narrative, opinion, or broad claims. • Medium — Mix of narrative and supporting detail (some data, examples, or specifics). • High — Dense with facts, statistics, or precise technical information. Instruction: • Decide whether the content clearly fits High or Low density first. • Choose Medium only if neither extreme fits confidently. • Return one label only: Low, Medium, or High. |

3 Principles of Effective Prompts

The difference between a useful content audit and a waste of time comes down to how you write your prompts.

A good framework for prompting is Task, Context, Output. What should the AI do? What context does it need? What output do you want?

Principle 1: Task – Be Specific

Vague prompts return vague results. Instead of asking AI to “analyse my content,” tell it exactly what to look for and why it matters.

Principle 2: Context – Provide the Necessary Context

AI doesn’t know your business, audience, or goals unless you tell it. The more context you provide, the more relevant your results will be.

Include specific details like:

- Your target buyer persona details

- Brand voice guidelines

- Funnel stage definitions

- Competitive positioning

- Strategic priorities

What counts as good content is highly subjective and depends on your business and audience. Make sure the AI has that context so it can judge your content accordingly.
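One way to keep that context consistent is to write it down once and merge it into each template before you run it. The sketch below is illustrative only: it assumes your templates use the `[Insert ... Here]` placeholder style from the table above, and `fill_placeholders` and the sample values are hypothetical names rather than part of Demand-Genius.

```python
import re

# Matches placeholders written like "[Insert Message Pillar Framework Here]".
PLACEHOLDER = re.compile(r"\[Insert (.+?) Here\]")


def fill_placeholders(template: str, context: dict[str, str]) -> str:
    """Replace each [Insert X Here] placeholder with context["X"].

    Raises KeyError if the template asks for context that was not supplied,
    so a prompt never runs with a placeholder still in it.
    """
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in context:
            raise KeyError(f"No context supplied for placeholder: {name}")
        return context[name]

    return PLACEHOLDER.sub(substitute, template)


# Hypothetical business context, written once and reused across prompts.
context = {
    "Message Pillar Framework": "Product Innovation; Customer Outcomes; Market Leadership",
}

template = (
    "Task: Map this content to the single most relevant message pillar.\n"
    "Reference: [Insert Message Pillar Framework Here]\n"
    "Instruction: Return one label only, using the exact pillar name."
)

prompt = fill_placeholders(template, context)
print(prompt)
```

Failing loudly on a missing value is a deliberate choice here: a prompt that still contains a placeholder will usually run without complaint and quietly return poor results.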

Principle 3: Output – Define Your Output Format

Remember, your results will live in a table. Design your prompts to return clean, consistent outputs that work well for filtering and analysis. The best prompts generate a response that can help you take action, at scale, rather than simply producing more work.

For tags: Use simple categories or scores. For example:

- “Return only: Keep, Kill or Improve”

- “Return either: on-brand or off-brand”

For notes: Ask for specific, structured information. For example:

- “List the top 3 missing elements”

- “Identify the primary sales use case”

Why this matters: Consistent formatting makes your audit data easy to understand.

You can use the output to quantify problems in a way that enables you to make strategic decisions confidently, and justify them with clear data if needed.
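To illustrate why a closed set of labels pays off, here is a minimal sketch of tallying results from a “Keep, Kill or Improve” prompt. The sample outputs and the `normalise_label` helper are hypothetical; the point is simply that one-label answers can be counted directly, while free-text answers need a human to read them.

```python
from collections import Counter

ALLOWED_LABELS = ("Keep", "Kill", "Improve")


def normalise_label(raw: str) -> str:
    """Map a raw answer onto an allowed label, or flag it for review."""
    # Trim whitespace, then stray full stops and quotes, then ignore case.
    cleaned = raw.strip().strip(".\"'").casefold()
    for label in ALLOWED_LABELS:
        if cleaned == label.casefold():
            return label
    return "Needs review"


# Hypothetical outputs from a "Return only: Keep, Kill or Improve" prompt.
raw_outputs = [
    "Keep",
    "improve",
    "Kill.",
    "Keep",
    "This post is mostly fine",
    " Improve ",
]

tally = Counter(normalise_label(answer) for answer in raw_outputs)
for label, count in tally.most_common():
    share = count / len(raw_outputs)
    print(f"{label}: {count} ({share:.0%})")
```

Anything that lands in “Needs review” is a sign the prompt's output instruction is not strict enough yet.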

Tips to Improve Effectiveness and Save Credits

Start Simple, Then Iterate

Don’t try to create the perfect prompt on your first attempt. Start with a basic version and run it on a small sample of content. This helps you catch formatting issues, unclear instructions, or outputs that don’t match your expectations.

Iterate on your sample until you’re confident, then run the prompt against the whole directory.
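The sample-first workflow can be sketched as follows. This assumes you have your content URLs available as a plain list; `pick_sample`, the sample size of 20, and the example URLs are all illustrative choices rather than product features.

```python
import random


def pick_sample(urls: list[str], size: int = 20, seed: int = 42) -> list[str]:
    """Return a reproducible random sample of URLs to test a prompt on.

    A fixed seed keeps the sample stable between iterations, so changes in
    the output come from your prompt edits rather than from different pages.
    """
    rng = random.Random(seed)
    return rng.sample(urls, k=min(size, len(urls)))


# Hypothetical content library of 500 pages.
library = [f"https://example.com/blog/post-{n}" for n in range(1, 501)]

sample = pick_sample(library)
print(f"Testing on {len(sample)} of {len(library)} pages")
```

A random sample is generally a safer test than the first few rows of your directory, which often share the same content type or publication date.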

Use Multiple Focused Prompts vs. One Complex Prompt

It’s tempting to create one mega-prompt that analyses everything, but focused prompts give better results and more useful data.

Instead of: “Score this content out of 10 for SEO, brand voice, sales value, and lead generation potential”

Use four separate prompts, each with specific criteria and output formats.
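As a rough sketch of that split, the mega-prompt can be broken into one prompt per dimension, each returning a single label. The label set and the `focused_prompt` helper below are assumptions made for illustration; in practice each prompt would carry its own criteria, as the templates earlier in this guide do.

```python
DIMENSIONS = ["SEO", "brand voice", "sales value", "lead generation potential"]
LABELS = ["High", "Medium", "Low"]


def focused_prompt(dimension: str) -> str:
    """Build a single-purpose prompt that returns exactly one label."""
    return (
        f"Task: Assess this content for {dimension} only.\n"
        "Instruction: Ignore every other quality of the content.\n"
        f"Return one label only: {', '.join(LABELS[:-1])}, or {LABELS[-1]}."
    )


# One prompt (and so one column in your results table) per dimension.
prompts = {dimension: focused_prompt(dimension) for dimension in DIMENSIONS}

for dimension, prompt in prompts.items():
    print(f"--- {dimension} ---\n{prompt}\n")
```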

Decision-Oriented Prompts

Before writing a prompt, ask yourself: “What decision will I make with this data?” If you can’t answer that clearly, refine the prompt until you can.

Good prompts answer questions like:

- Which content should I update first?

- What can my sales team use right now?

- Where should I focus my next content creation efforts?

- What content could potentially be gated for lead generation?

Thinking this through before you run a prompt will help you act on the data you generate, and avoid burning credits on analysis you won’t use.

Want more help?

The 9 templates in this guide will get you started immediately, but the real power comes from customising prompts for your specific business needs. Use the principles and tips in this article as a starting point to test, iterate, and refine until your audit data directly informs your content decisions.

We’re always happy to help with this process, and always keen to hear about new use cases or challenges that our solution can solve.

Feel free to book a time with our Founder, Tom Rudnai, below to run through any questions or if you’d like help crafting a prompt for your use case.